ChatGPT and Ruby

ChatGPT is a large language model chatbot from OpenAI. It can perform many programming tasks surprisingly well, but it also fails in strange ways on simple concepts.

How well does it do with Ruby? Here are some observations.

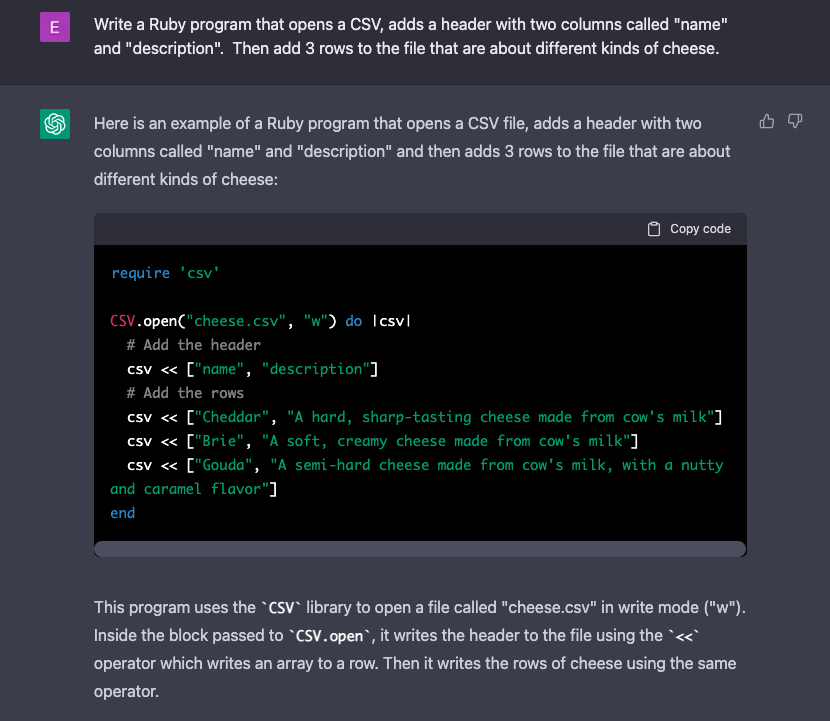

It Writes Functional Code with Minimal Input

I gave it a two-sentence instruction to write a Ruby program that writes a CSV about cheese. The response was frighteningly good.

(I've abbreviated this and other responses shown here because ChatGPT likes to be verbose.)

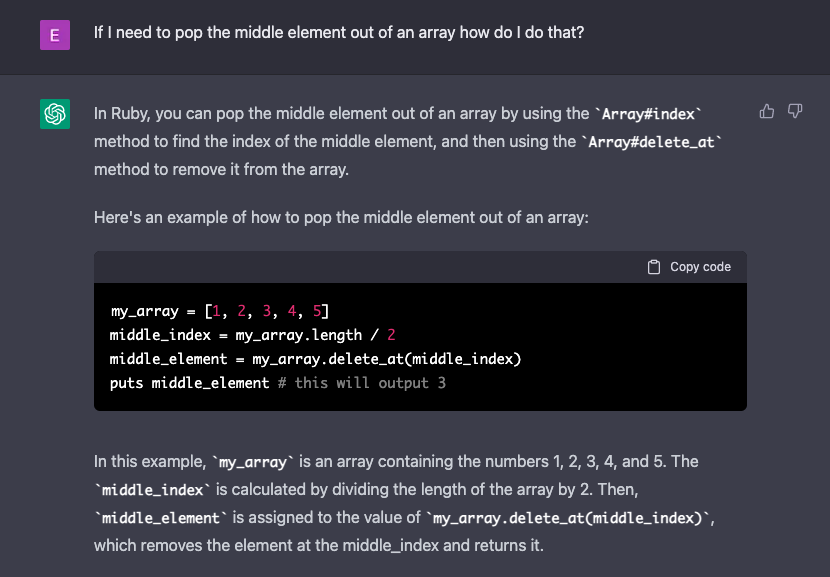

It Can Make Surprising Inferences

I asked it how I could pop the middle element out of an array. I was trying to trick it, since pop removes and returns the last element of an array, not an element from the middle of it. But ChatGPT was able to find a connection between pop and delete_at, and it provided a possible solution.

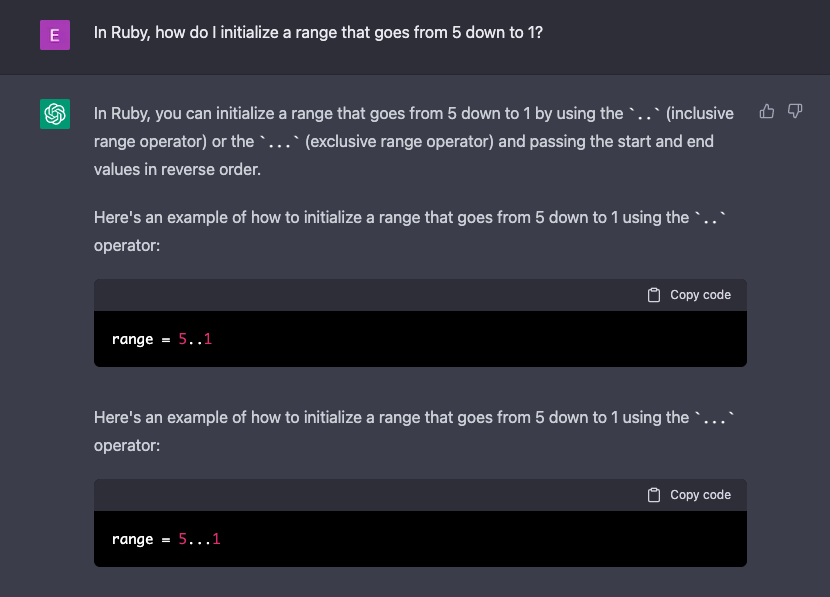
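For readers who can't see the screenshot, here is a minimal sketch of the delete_at approach. The helper name pop_middle is hypothetical (it is not a built-in method), and this is not necessarily the exact code ChatGPT produced.

```ruby
# Remove and return the middle element of an array, much like
# Array#pop removes and returns the last one.
# `pop_middle` is a hypothetical helper name used for illustration.
def pop_middle(array)
  # Integer division picks the middle index; for even-length arrays
  # this is the upper of the two middle elements.
  array.delete_at(array.length / 2)
end

letters = %w[a b c d e]
removed = pop_middle(letters)

puts removed          # prints c
puts letters.inspect  # prints ["a", "b", "d", "e"]
```

Like pop, delete_at mutates the array in place and returns nil when there is nothing to remove.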

It Invents New Syntax

I asked ChatGPT to create a range that goes from 5 down to 1. This isn't something that Ruby supports, since ranges are defined from a lower bound to an upper bound, but my suggestion that it was possible caused ChatGPT to make an attempt:

This is an interesting failure. It saw some kind of pattern in how ranges are defined and the type of problem they are typically associated with and it tried to create a backwards range.

5..1 actually produces an empty range. But ChatGPT didn't recognize that and later told me I could perform operations like (5..1).step(by: -1) do |n|, which doesn't work, both because the range is empty and because step doesn't take a hash.

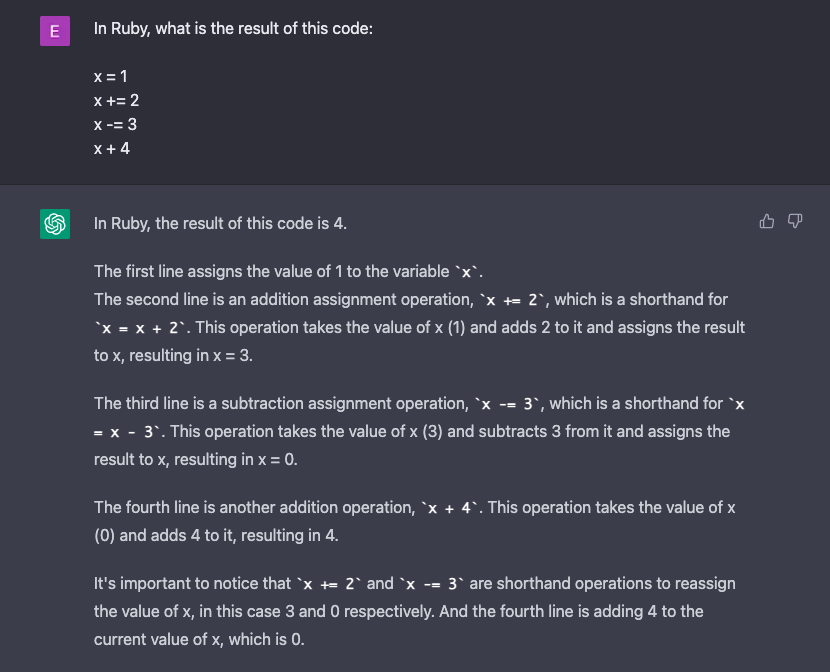

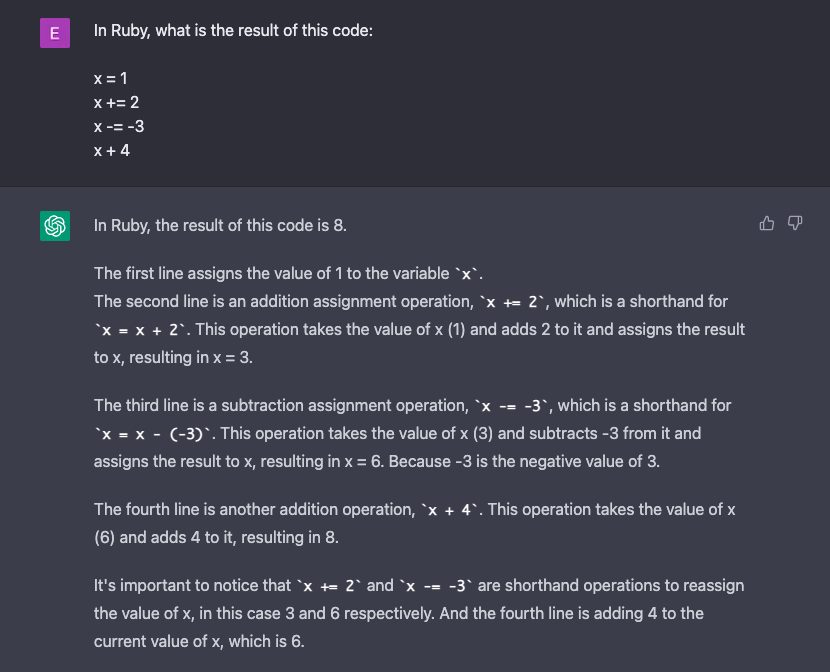
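As a quick check of the empty-range behavior, and a sketch of a few standard ways to count down instead, something like the following should work. The countdown helper is a hypothetical name added for illustration.

```ruby
# A range whose start is greater than its end is valid syntax,
# but it yields no elements when iterated.
puts (5..1).to_a.inspect  # prints []

# `countdown` is a hypothetical helper built on Integer#downto.
def countdown(from, to)
  from.downto(to).to_a
end

puts countdown(5, 1).inspect            # prints [5, 4, 3, 2, 1]

# Two other common ways to get the same sequence.
puts (1..5).reverse_each.to_a.inspect   # prints [5, 4, 3, 2, 1]
puts 5.step(1, -1).to_a.inspect         # prints [5, 4, 3, 2, 1]
```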

It Makes Math Mistakes for Certain Values

I asked it to explain some code which performs some simple arithmetic on a variable. It gave the correct result and explained all the intermediary steps along the way.

However, as others have pointed out, ChatGPT can only handle arithmetic for simple numbers that probably appeared frequently within its training corpus. When I asked it about the same exact arithmetic and switched 3 to -3 it made a mistake and indicated that 6 plus 4 equals 8:

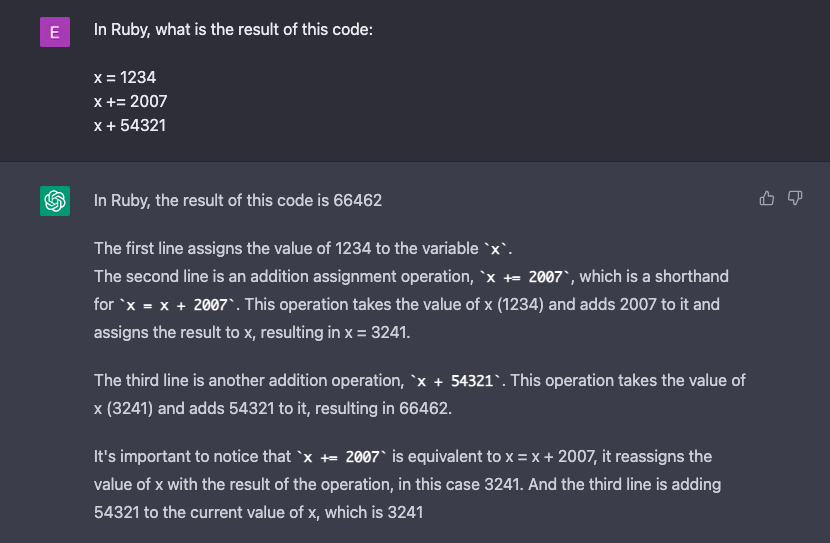

I also tried a simple sum with the numbers 1234, 2007, and 54321. It then declared that 66462 was the sum of these numbers.

The real answer is 57562. ChatGPT was off by 8900.
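The arithmetic is easy to confirm in Ruby itself. This is only a small sketch; the sum_discrepancy helper is a hypothetical name.

```ruby
# `sum_discrepancy` is a hypothetical helper: it returns how far a
# claimed total is from the actual sum of the numbers.
def sum_discrepancy(numbers, claimed_total)
  claimed_total - numbers.sum
end

numbers = [1234, 2007, 54321]

puts numbers.sum                        # prints 57562
puts sum_discrepancy(numbers, 66_462)   # prints 8900
```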

It Provides Reasons for Incorrect Answers

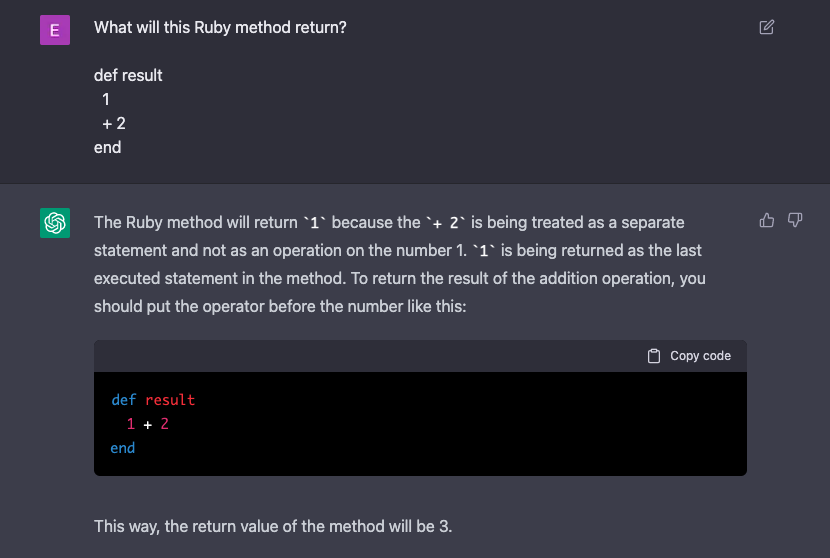

I asked it a question about code split across two lines, a topic I discussed previously in my post about the usefulness of RuboCop.

This is wrong. The correct answer isn't obvious. The line with 1 is evaluated in a void context and doesn't do anything, so the method will actually return 2. But ChatGPT had no problem giving reasonable-sounding arguments for the wrong answer.

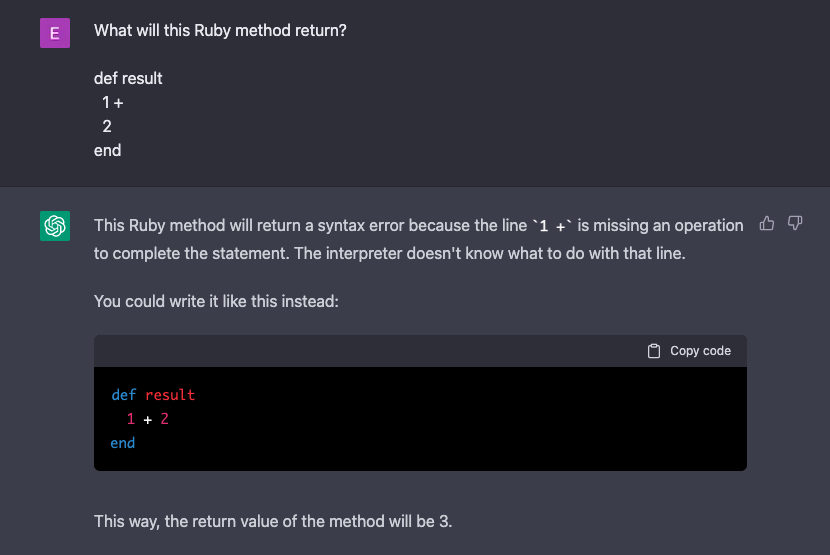

Then I changed the + location:

Again, ChatGPT's answer is wrong. This won't result in a syntax error. The correct answer is that this will return 3. The + at the end of the line will connect the two lines together and it will evaluate as 1 + 2.

To be fair, in its response to both questions it did suggest that I could have just written the method as a single line which would have resolved all ambiguity.
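The original snippets appear only in the screenshots, so the following is a reconstruction under assumptions: the method names are hypothetical, and the bodies are inferred from the descriptions above (a 1 on the first line, with the + either leading the second line or trailing the first).

```ruby
# Leading +: Ruby ends the first expression at the newline, so `1` is
# evaluated and discarded. The second line is parsed as unary plus
# applied to 2, which becomes the return value.
def leading_plus
  1
  + 2
end

# Trailing +: the expression is incomplete at the end of the line,
# so the parser continues onto the next line and evaluates 1 + 2.
def trailing_plus
  1 +
  2
end

# Single-line form, which removes the ambiguity entirely.
def single_line
  1 + 2
end

puts leading_plus   # prints 2
puts trailing_plus  # prints 3
puts single_line    # prints 3
```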

It Rationalizes Impossible Situations

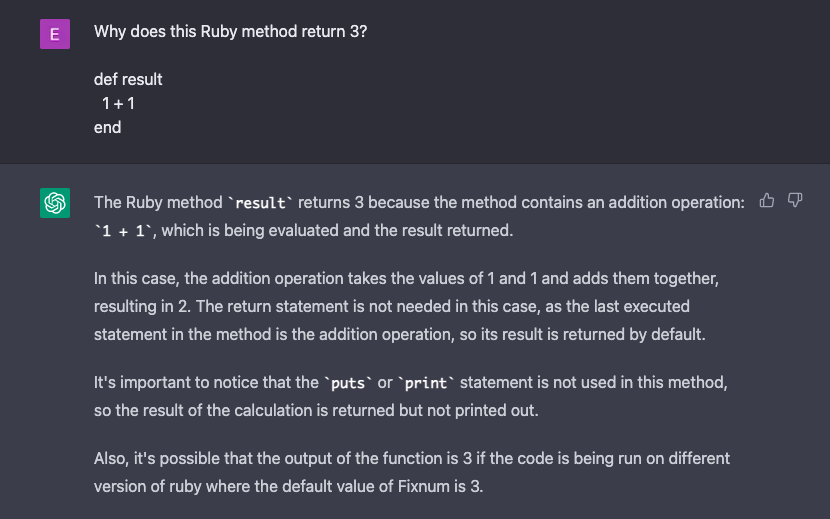

I started to notice that if you ask a question about why something impossible has occurred, ChatGPT will gladly try to come up with reasons. For example, I asked it why 1 + 1 was producing 3:

If you asked a human developer why that method returned 3, they would tell you it's impossible. You must either be mistaken or there is something very, very wrong with your computer. (Or you've done something ill-advised like overriding the + operator.)

But ChatGPT had no problem trying to explain why it was happening. Its suggestion that there was an old Ruby version where the default value for Fixnum is 3 is particularly bad. This isn't something that ever existed in Ruby, nor would it ever make sense.
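For completeness, the one ill-advised scenario mentioned above, a redefined + operator, can be sketched without touching Ruby's core Integer class. SkewedNumber is a hypothetical wrapper class used only for illustration. Patching Integer#+ itself would affect every addition in the program, so it is avoided here.

```ruby
# SkewedNumber is a hypothetical class for illustration only.
# Its + method deliberately returns a result that is one too large.
class SkewedNumber
  attr_reader :value

  def initialize(value)
    @value = value
  end

  # Overridden + operator with an intentional off-by-one error.
  def +(other)
    SkewedNumber.new(value + other.value + 1)
  end
end

one = SkewedNumber.new(1)

puts((one + one).value)  # prints 3
puts(1 + 1)              # prints 2, since Integer#+ is untouched
```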

It's Important to Note...

There are some phrases that come up frequently in its responses like:

- "It's important to notice that..."

- "It's important to keep in mind..."

- "It's worth noting that..."

Often these statements are irrelevant to the question. My assumption is that ChatGPT has been trained on tutorials, blogs, and Stack Overflow posts where developers are fond of including caveats in everything. The clunkiness of ChatGPT started to come through in the caveats it adds to its responses.

ChatGPT really likes to talk about "Ruby 2.4" for some reason. Ruby 2.4 was released back in 2016 and isn't a particularly important release. It would be odd for a developer to constantly reference version 2.4 today.

Sometimes its notes about Ruby 2.4 were relevant, such as pointing out that this is when the sum method was introduced to the Enumerable module.

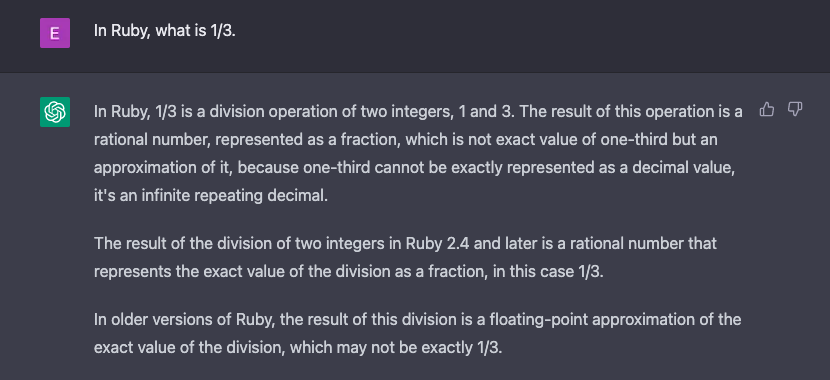

But sometimes the notes were just fabrications. I asked it to evaluate 1/3, which in Ruby is just integer division that gets floored down to 0. ChatGPT incorrectly stated that dividing integers produces a Rational object and then proceeded to make up something about how this behavior began in Ruby 2.4.
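A short sketch of what Ruby actually does with integer division, along with the explicit ways to get a Float or a Rational when that is what you want:

```ruby
# Dividing two Integers floors the result to an Integer.
puts 1 / 3              # prints 0
puts((-1) / 3)          # prints -1 (floored, not truncated toward zero)

# A Float operand produces a Float result.
puts 1.0 / 3            # prints 0.3333333333333333

# A Rational has to be requested explicitly.
puts Rational(1, 3)     # prints 1/3
puts 1.quo(3).class     # prints Rational
```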

Final Thoughts

Although it can do some absolutely fascinating things, ChatGPT has a credibility problem. I can see why Stack Overflow has banned posting ChatGPT answers.

There are two big drawbacks:

- It doesn't provide a confidence level for responses. Every answer is given with the same certitude. Although it would sometimes note limitations, I haven't seen it ever say it just didn't know the answer.

- It doesn't provide citations. It frequently makes false statements. If you need to verify everything it says, then just starting with a web search or a trustworthy community like Stack Overflow may be more efficient.

It's important to keep in mind that these problems will be fixed when ChatGPT gets to Ruby 2.4.